Ethical Considerations in AI-Driven Social Media Design

When it comes to designing ethically sound online experiences, there’s a delicate balance between business growth and responsibility. Artificial intelligence has become the foundation of modern social media platforms, powering personalized content and driving engagement. But beneath the surface, a web of ethical dilemmas keeps growing, raising important questions about privacy, bias, mental health, and digital manipulation. As we interact with these systems daily, we must ask: are AI-driven platforms truly serving our best interests, or subtly shaping them to serve their own?

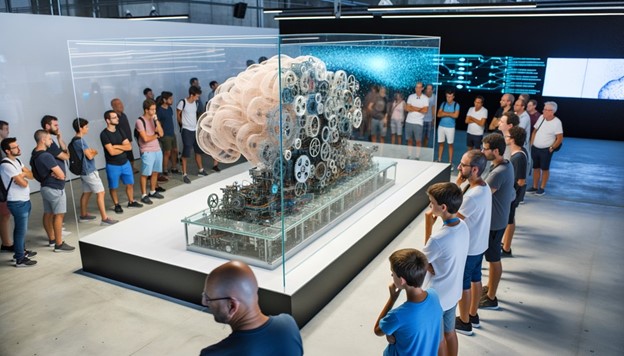

Analyzing AI in Social Media

Artificial intelligence has radically altered how social media platforms operate. According to Statista, over 70% of global social media companies actively use AI to improve user experience. From recommending posts to curating trending content, AI algorithms continuously analyze user behavior (likes, shares, comments) to customize feeds and increase time spent on the platform.

Although this personalization improves usability and engagement, it comes at a cost. Algorithms often prioritize emotionally charged or sensational content to boost interaction. This can skew perception, reinforce confirmation bias, and create echo chambers where users rarely encounter opposing viewpoints. For example, Harvard Business Review explains how algorithmic filtering can limit exposure to diverse viewpoints, effectively narrowing our worldview without our conscious consent.
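To make the ranking mechanism concrete, here is a minimal Python sketch of an engagement-weighted feed. It assumes each post carries simple like, share, and comment counts and that the ranker scores posts with a fixed weighted sum; the `Post` class, the `WEIGHTS` values, and the function names are hypothetical illustrations, not any platform's actual model.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    likes: int
    shares: int
    comments: int


# Hypothetical weights: shares and comments are assumed to count more than
# likes, as is common in engagement-optimized ranking sketches.
WEIGHTS = {"likes": 1.0, "shares": 3.0, "comments": 2.0}


def engagement_score(post: Post) -> float:
    """Weighted sum of a post's interaction counts."""
    return (WEIGHTS["likes"] * post.likes
            + WEIGHTS["shares"] * post.shares
            + WEIGHTS["comments"] * post.comments)


def rank_feed(posts: list[Post]) -> list[Post]:
    """Order posts by descending engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)


if __name__ == "__main__":
    feed = [
        Post("calm-explainer", likes=120, shares=5, comments=10),
        Post("outrage-bait", likes=80, shares=40, comments=60),
        Post("local-news", likes=50, shares=10, comments=5),
    ]
    for post in rank_feed(feed):
        print(post.post_id, engagement_score(post))
    # outrage-bait 320.0
    # calm-explainer 155.0
    # local-news 90.0
```

With these illustrative weights, a post that provokes many shares and comments outranks one with more likes, which is one way sensational content can rise to the top of a feed.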

“AI doesn’t just reflect our behavior—it amplifies it. The challenge lies in making sure it amplifies our best, not our worst.” — Safiya Umoja Noble, Author, Algorithms of Oppression

In essence, while AI enhances social media’s ability to connect you with content, it also compels you to critically evaluate the implications of such engagement. Are you truly engaging with a broad spectrum of ideas, or are you being subtly guided toward a narrower worldview?

Privacy Concerns and User Data

Your digital footprint, everything from your clicks to your scroll speed, is collected and analyzed in detail. This behavioral data is then used to build detailed profiles that inform content curation, ad targeting, and even psychological predictions. A Brookings Institution report argues that such data collection, often conducted without clear user consent, constitutes a violation of digital human rights.

Data misuse can lead to predatory advertising, identity theft, and algorithmic manipulation. The infamous Cambridge Analytica scandal demonstrated how personal data could be weaponized for political influence. Despite such incidents, most platforms still lack transparency in how user data is collected, stored, and shared.

- Unclear Terms of Service: Many users unknowingly consent to invasive data practices due to complex or vague legal language.

- Third-Party Sharing: Data is often sold or shared with advertisers, insurance companies, or political consultants.

- Psychographic Profiling: Algorithms can infer sensitive traits such as mental health status or sexual orientation.

As a user, awareness is your first line of defense. Platforms should empower you with better tools to control your data and understand how it is used.

Bias in Algorithms

Algorithms are not neutral. Because they are trained on human-generated data, they often reflect and perpetuate societal biases. According to a 2021 study in Nature Machine Intelligence, biased AI models can disproportionately amplify content based on race, gender, or political affiliation.

- Data Imbalance: Underserved communities are often underrepresented in training data.

- Reinforcement Loops: Algorithms promote the content users engage with most—often sensational, polarizing material.

- Lack of Oversight: Proprietary algorithms function as “black boxes,” offering little visibility into how decisions are made.
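The reinforcement loop in the list above can be illustrated with a toy simulation. This is a minimal sketch under strong simplifying assumptions: the feed always shows the single topic with the highest estimated affinity, the user engages with whatever is shown, and each engagement multiplies that topic's affinity by a fixed `boost`. The function name, topic names, and numbers are hypothetical.

```python
def simulate_feedback_loop(affinity: dict[str, float], rounds: int,
                           boost: float = 0.2) -> dict[str, int]:
    """Count how many times each topic is shown by a greedy ranker.

    Each round the ranker shows the topic with the highest affinity
    (ties go to the first topic listed), and the assumed engagement
    raises that topic's affinity by a factor of (1 + boost).
    """
    estimates = dict(affinity)  # copy so the caller's dict is not mutated
    shown = {topic: 0 for topic in estimates}
    for _ in range(rounds):
        top = max(estimates, key=estimates.get)
        shown[top] += 1
        estimates[top] *= 1 + boost  # engagement feeds back into ranking
    return shown


if __name__ == "__main__":
    start = {"politics": 0.35, "science": 0.33, "sports": 0.32}
    print(simulate_feedback_loop(start, rounds=10))
    # {'politics': 10, 'science': 0, 'sports': 0}
```

In this toy setup a 0.02 head start is enough for one topic to take every slot. Production recommenders often add exploration or diversity mechanisms to counter this kind of lock-in; the sketch omits them to show the loop in isolation.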

“When biased algorithms shape our information diets, they don’t just distort the news—they distort reality.” — Joy Buolamwini, Founder, Algorithmic Justice League

Ethical development must include bias audits, inclusive training datasets, and greater algorithmic transparency. Frameworks such as Google’s AI Principles show that some tech firms are beginning to address these issues, but industry-wide accountability is still lacking.
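One simple form a bias audit can take is comparing how much exposure content from different creator groups receives. The sketch below assumes you already have, for each post, a group label and an impression count; it computes average impressions per group and flags the result when the lowest group's rate falls below 80% of the highest. That 0.8 threshold is borrowed, as an assumption, from the "four-fifths rule" used in U.S. employment-selection guidelines, and the group labels and function names are hypothetical.

```python
from collections import defaultdict


def exposure_by_group(records: list[tuple[str, int]]) -> dict[str, float]:
    """Average impressions per post for each (hypothetical) creator group.

    Each record is (group_label, impressions) for a single post.
    """
    totals: dict[str, int] = defaultdict(int)
    counts: dict[str, int] = defaultdict(int)
    for group, impressions in records:
        totals[group] += impressions
        counts[group] += 1
    return {group: totals[group] / counts[group] for group in totals}


def disparity_ratio(rates: dict[str, float]) -> float:
    """Lowest group rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no exposure at all, so no group is favored
    return min(rates.values()) / highest


def needs_review(rates: dict[str, float], threshold: float = 0.8) -> bool:
    """Flag the audit when the disparity ratio falls below the threshold."""
    return disparity_ratio(rates) < threshold


if __name__ == "__main__":
    audit = [("group_a", 1000), ("group_a", 800),
             ("group_b", 400), ("group_b", 500)]
    rates = exposure_by_group(audit)
    print(rates, disparity_ratio(rates), needs_review(rates))
```

Equal exposure is not automatically the right target, since groups may post different kinds of content, so a flag like this is best treated as a prompt for human review rather than a verdict.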

Manipulation vs. Personalization

The biases inherent in algorithms can often blur the lines between manipulation and personalization in social media design. When you scroll through your feed, you might think the content is tailored just for you, but the underlying algorithms can drive your attention toward specific narratives or products. This isn’t always benign; it can lead to a feeling of being manipulated, especially when the curated content reinforces existing beliefs or prompts compulsive engagement.

Personalization aims to improve user experience, but the same techniques can also be used to keep you hooked. You may find yourself down a rabbit hole of extreme views or targeted ads that exploit your vulnerabilities.

This raises important ethical questions: Are you truly benefiting from individualized content, or are you being subtly coerced into specific behaviors?

The challenge lies in recognizing when personalization supports your interests and when it serves concealed agendas. As you engage with social media platforms, it’s necessary to remain aware of these dynamics.

Transparency in AI Decisions

Transparency fosters trust, yet AI systems often lack it. Users have limited visibility into why they see certain content or how their engagement impacts recommendations. This opacity allows platforms to avoid accountability and makes it difficult for users to detect bias or manipulation.

- Explainability Tools: Platforms should integrate tools like “Why am I seeing this?” prompts to explain content recommendations.

- User Dashboards: Personalized dashboards showing data usage and algorithm influence can promote awareness.

- Regular Audits: Routine evaluations of AI systems can help identify biases and inconsistencies and check that algorithms align with ethical standards. Regular audits aren’t just beneficial for large platforms; they’re increasingly relevant for tools offering AI marketing for small businesses, where ethical oversight can directly affect customer trust and satisfaction.
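A "Why am I seeing this?" prompt is easiest to build when the ranking score is additive, because each signal's contribution can be read off directly. The sketch below assumes a linear score (weight times signal value, summed); the signal names, weights, explanation strings, and the `explain` function are hypothetical examples, not any platform's real ranking features.

```python
# Hypothetical mapping from internal ranking signals to plain-language reasons.
REASONS = {
    "follows_author": "You follow this account",
    "topic_affinity": "You often engage with posts on this topic",
    "trending": "This post is popular right now",
    "paid_promotion": "An advertiser paid to show you this post",
}


def explain(signals: dict[str, float], weights: dict[str, float],
            top_k: int = 2) -> list[str]:
    """Return reasons for the top_k signals that raised this post's score.

    Assumes score = sum(weights[name] * value), so each term is that
    signal's contribution. Signals with no weight contribute nothing.
    """
    contributions = {
        name: weights.get(name, 0.0) * value for name, value in signals.items()
    }
    positive = [(name, c) for name, c in contributions.items() if c > 0]
    positive.sort(key=lambda item: item[1], reverse=True)
    return [REASONS.get(name, name) for name, _ in positive[:top_k]]


if __name__ == "__main__":
    weights = {"follows_author": 2.0, "topic_affinity": 1.5, "trending": 0.5}
    signals = {"follows_author": 1.0, "topic_affinity": 0.6, "trending": 0.9}
    for reason in explain(signals, weights):
        print("-", reason)
```

For non-linear models such as deep neural networks, per-signal contributions are not directly available, and explanations usually rely on approximate attribution methods such as SHAP.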

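The Blueprint for an AI Bill of Rights, published by the White House Office of Science and Technology Policy in 2022, makes the same point: people should know when an automated system is being used and understand how it affects them.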

Impact on Mental Health

The link between social media and mental health is well-documented. Platforms engineered for engagement often encourage addictive behavior. Constant exposure to curated lives and targeted ads can contribute to anxiety, depression, and low self-esteem, particularly among younger users. A Lancet Psychiatry study found that excessive social media use is correlated with poor mental health outcomes, especially in adolescents.

Ethical design must consider:

- Screen Time Reminders: Encourage digital well-being through customizable time limits.

- Content Warnings: Alert users to potentially triggering content, especially in sensitive topics like body image or trauma.

- Wellness Nudges: Use AI to suggest mental health breaks instead of infinite scrolling.
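Screen time reminders and wellness nudges can be driven by fairly simple session logic. The sketch below assumes the app reports a timestamp (in minutes) whenever the user is active, treats a pause longer than `idle_reset_minutes` as the start of a new session, and shows at most one reminder per session once the user-chosen `limit_minutes` is reached. The `BreakReminder` class and its default values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class BreakReminder:
    limit_minutes: float = 20.0       # user-configurable limit (hypothetical default)
    idle_reset_minutes: float = 5.0   # pause that starts a new session (assumption)
    _session_start: Optional[float] = field(default=None, init=False, repr=False)
    _last_seen: Optional[float] = field(default=None, init=False, repr=False)
    _reminded: bool = field(default=False, init=False, repr=False)

    def on_activity(self, now_minutes: float) -> bool:
        """Record activity at `now_minutes`; return True if a break reminder
        should be shown at this moment."""
        if (self._last_seen is None
                or now_minutes - self._last_seen > self.idle_reset_minutes):
            # First event, or the user stepped away long enough: new session.
            self._session_start = now_minutes
            self._reminded = False
        self._last_seen = now_minutes
        if (not self._reminded
                and now_minutes - self._session_start >= self.limit_minutes):
            self._reminded = True  # nudge once per session, not on every scroll
            return True
        return False


if __name__ == "__main__":
    reminder = BreakReminder(limit_minutes=20)
    # Simulated scrolling: an activity event every 2 minutes for 30 minutes.
    fired = [t for t in range(0, 31, 2) if reminder.on_activity(t)]
    print(fired)  # [20]
```

Because the limit is a constructor argument, the user rather than the platform can choose it, which matches the customizable time limits described in the list.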

Some platforms, like Instagram and TikTok, have introduced “Take a Break” features, but they remain optional and inconsistent. A more systematic approach is needed to align engagement strategies with mental well-being.

Ethical Responsibilities of Designers

Designers hold a deep responsibility to protect users from harm. Ethical design means prioritizing user health, inclusivity, and autonomy at every development stage.

- Design for Empowerment: Give users meaningful control over how their data is used and what content they receive.

- Promote Accessibility: Inclusive design should support neurodiverse users, people with disabilities, and non-native speakers.

- Build for Transparency: Include interfaces that show how algorithms function and give users a say in modifications.

- Guard Against Harm: Develop systems to prevent cyberbullying, harassment, and the spread of misinformation.

Designers must partner with ethicists, psychologists, and civil rights advocates to create platforms that serve human needs—not just metrics.

The Future of Ethical Social Media

Imagine platforms that prioritize mental health, diversity, and democratic values over mere clicks. The future of ethical social media must include:

- Co-Governance Models: Users and developers jointly create community guidelines and content moderation standards.

- Open-Source Algorithms: Publicly available code ensures transparency and invites collaborative oversight.

- Value-Based Feeds: Instead of engagement-driven timelines, offer options to organize content around ethics, learning, or creativity.

- Cross-Platform Ethics Coalitions: Similar to environmental pledges, platforms could join ethics alliances to standardize accountability.
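The value-based feed idea from the list above can be sketched as a re-ranking step. In this minimal example, which assumes posts already carry topic tags and that users pick the values they want prioritized, posts are ordered first by how many chosen values they match, with engagement used only to break ties. The tags, the `Post` fields, and the `value_based_feed` function are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    post_id: str
    engagement: float                      # e.g. a raw engagement score
    tags: set[str] = field(default_factory=set)


def value_based_feed(posts: list[Post], chosen_values: set[str]) -> list[Post]:
    """Rank posts by how many user-chosen values they match.

    Engagement is only a tie-breaker, so a viral post with no matching
    value cannot outrank a less popular post that does match.
    """
    return sorted(
        posts,
        key=lambda p: (len(p.tags & chosen_values), p.engagement),
        reverse=True,
    )


if __name__ == "__main__":
    feed = [
        Post("viral-prank", 900.0, {"entertainment"}),
        Post("coding-tutorial", 120.0, {"learning", "creativity"}),
        Post("art-timelapse", 300.0, {"creativity"}),
    ]
    ranked = value_based_feed(feed, chosen_values={"learning", "creativity"})
    print([p.post_id for p in ranked])
    # ['coding-tutorial', 'art-timelapse', 'viral-prank']
```

The design choice here is simply to demote engagement from the primary sort key to a tie-breaker; how tags are assigned, and by whom, is left open and would itself need the kind of oversight discussed earlier.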

These changes won’t be easy—but they’re necessary. As platforms grow, so must our expectations and standards.

Conclusion

The rapid deployment of AI in social media has outpaced our ethical frameworks. But it’s not too late. Social media shouldn’t be an experiment in persuasion; it should be a tool for connection, empowerment, and collective growth.

The choice lies with all of us—designers, users, policymakers—to demand and build platforms that serve humanity, not exploit it.